An MCP (Model Context Protocol) Server is a crucial component of an open-standard protocol designed to bridge the gap between generative AI applications and various data sources, including Software as a Service (SaaS) platforms and APIs. Its primary function is to provide a standardized way for AI models to access and interact with external tools and data in real time, which extends their capabilities and helps keep the information they use current.

Utilizing an MCP Server with Your SaaS and APIs

If you have a SaaS product with a set of APIs, you can leverage an MCP Server to allow your customers or internal users to interact with your service through generative AI models like ChatGPT or custom AI applications. Here’s how you can utilize it.

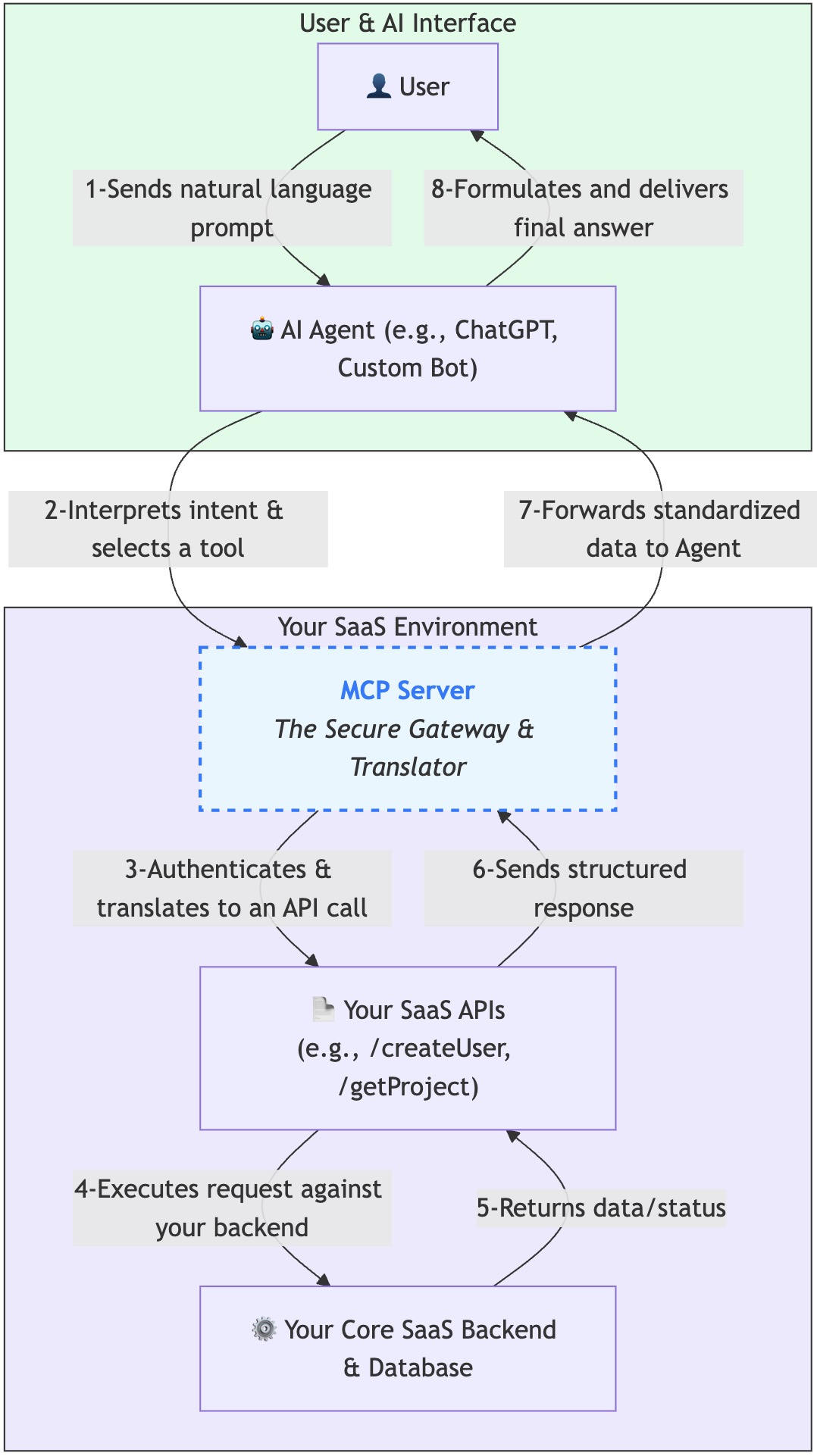

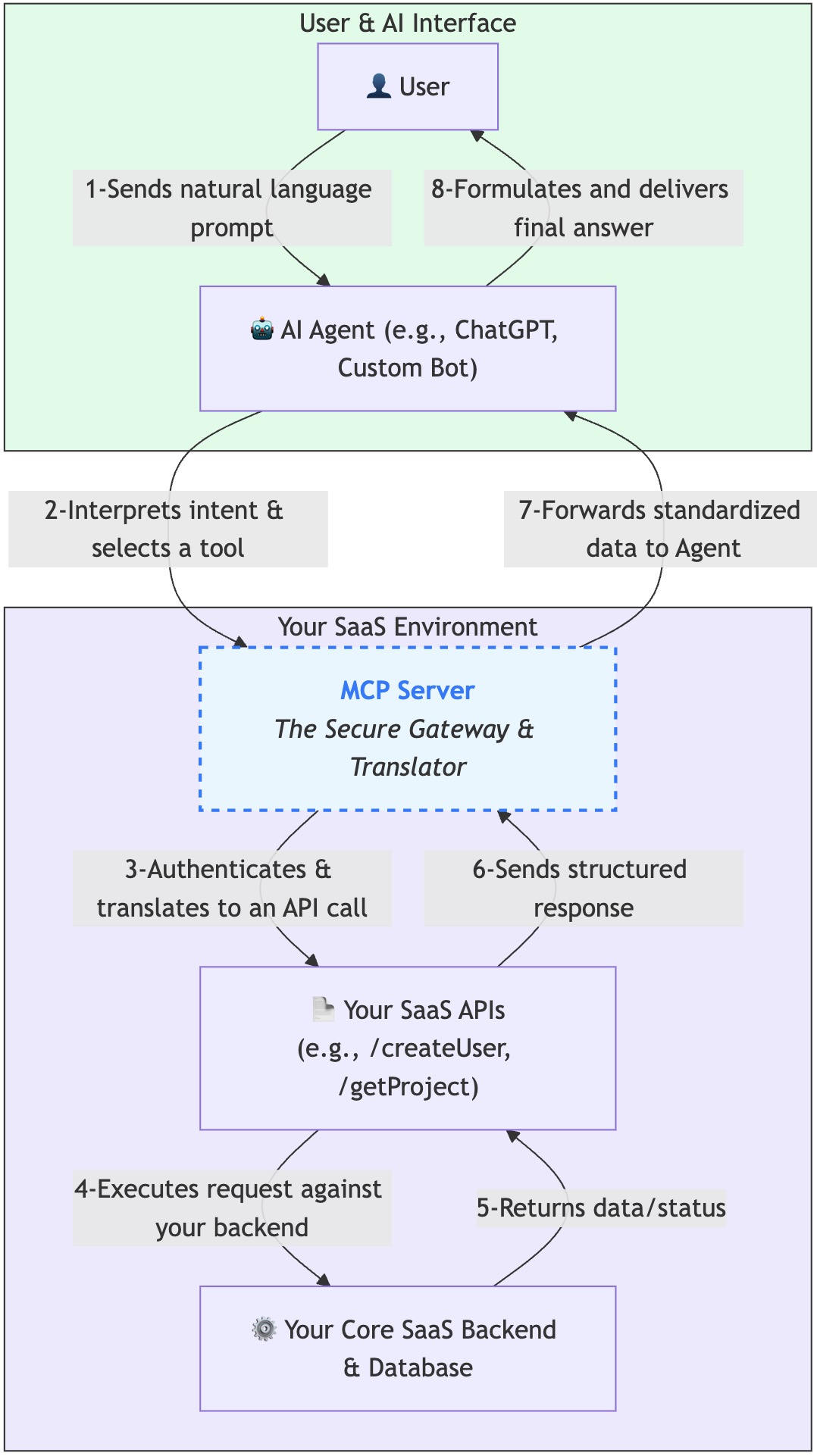

The MCP Server Architecture in a SaaS Environment

This chart illustrates the flow of a user request, starting from a natural language prompt to an AI Agent, and how the MCP Server acts as a secure bridge to your SaaS application’s backend.

How to Read the Chart:

- User Request: The process begins when a user gives a command in plain language (e.g., “Create a new report for this week”) to an AI Agent.

- AI Interpretation: The AI Agent understands the user’s goal and recognizes that it needs to use one of the available tools (e.g., a create_report tool) to fulfill the request.

- The MCP Server’s Role: Instead of connecting directly to your SaaS, the AI Agent makes a standardized call to the MCP Server. This is the key step. The MCP Server acts as a secure entry point. It validates the request and translates the generic tool call into a specific, authorized API call that your SaaS can understand (e.g., POST /api/v1/reports).

- SaaS Execution: The MCP Server calls your existing SaaS API, which then communicates with your backend and database to perform the action (like creating the report).

- The Return Path: The result is sent back up the same chain, with the MCP Server ensuring the data is in a clean, standardized format that the AI Agent can easily use to give the user a final, coherent response.
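The flow above can be sketched in a few lines of Python. This is a minimal illustration rather than a real MCP Server: the `tools/call` message and the `content` result follow the JSON-RPC shapes that MCP uses, while `TOOL_ROUTES`, `fake_saas_api`, and the returned report fields are assumptions made up for the example.

```python
import json

# Tool name -> SaaS endpoint it is translated into (from the example above).
TOOL_ROUTES = {"create_report": ("POST", "/api/v1/reports")}

def fake_saas_api(method, path, body):
    """Hypothetical stand-in for the SaaS API; a real server would send HTTP here."""
    if (method, path) == ("POST", "/api/v1/reports"):
        return {"id": 101, "period": body.get("period"), "status": "created"}
    raise ValueError(f"no such endpoint: {method} {path}")

def error(request_id, code, text):
    """Build a JSON-RPC error response."""
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": text}}

def handle_message(message):
    """Validate a tool call, translate it to a SaaS call, and wrap the result."""
    request_id = message.get("id")
    if message.get("method") != "tools/call":
        return error(request_id, -32601, "Method not found")
    params = message.get("params", {})
    route = TOOL_ROUTES.get(params.get("name"))
    if route is None:
        return error(request_id, -32602, "Unknown tool")
    method, path = route
    saas_result = fake_saas_api(method, path, params.get("arguments", {}))
    # Return path: the SaaS response is wrapped in one uniform result shape.
    return {"jsonrpc": "2.0", "id": request_id,
            "result": {"content": [{"type": "text", "text": json.dumps(saas_result)}],
                       "isError": False}}

request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
           "params": {"name": "create_report", "arguments": {"period": "this week"}}}
response = handle_message(request)
print(json.dumps(response, indent=2))
```

The AI Agent only ever sees the tool name and the wrapped result. The endpoint path stays inside the server, which is what lets you change your API without changing the agent.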

Key Purposes and Benefits of an MCP Server:

- Real-Time Data Access: Unlike traditional methods that may rely on periodic data indexing, an MCP Server allows AI models to query databases and APIs in real-time. This ensures that the AI’s responses are based on the most up-to-date information available.

- Enhanced Security and Control: MCP Servers provide a secure gateway to your data. They allow for robust authentication and access control, ensuring that sensitive information is protected and only authorized users or models can access specific data or perform certain actions.

- Reduced Computational Load: By directly accessing data, MCP Servers can be more efficient than systems like Retrieval-Augmented Generation (RAG) that require creating and storing embeddings of data. This can lead to lower computational costs and faster response times.

- Standardized Integration: The Model Context Protocol offers a universal approach to connecting AI with various data sources. This simplifies the development process as you don’t need to build custom integrations for each new data source or AI model.

Connect Your MCP Server to AI Agents and Workflows

Once your MCP Server is operational, the next step is to connect it to AI agents and automated workflows. This is where the integration starts to pay off. A highly effective and scalable approach is to build a custom node for a workflow automation platform such as n8n or Flowise, or a custom tool for a framework such as LangChain.

This custom node acts as a reusable, plug-and-play component within the workflow manager’s visual interface. It encapsulates all the necessary logic to communicate with your MCP Server, allowing you to easily trigger specific tools and data requests as steps in a larger automated process.

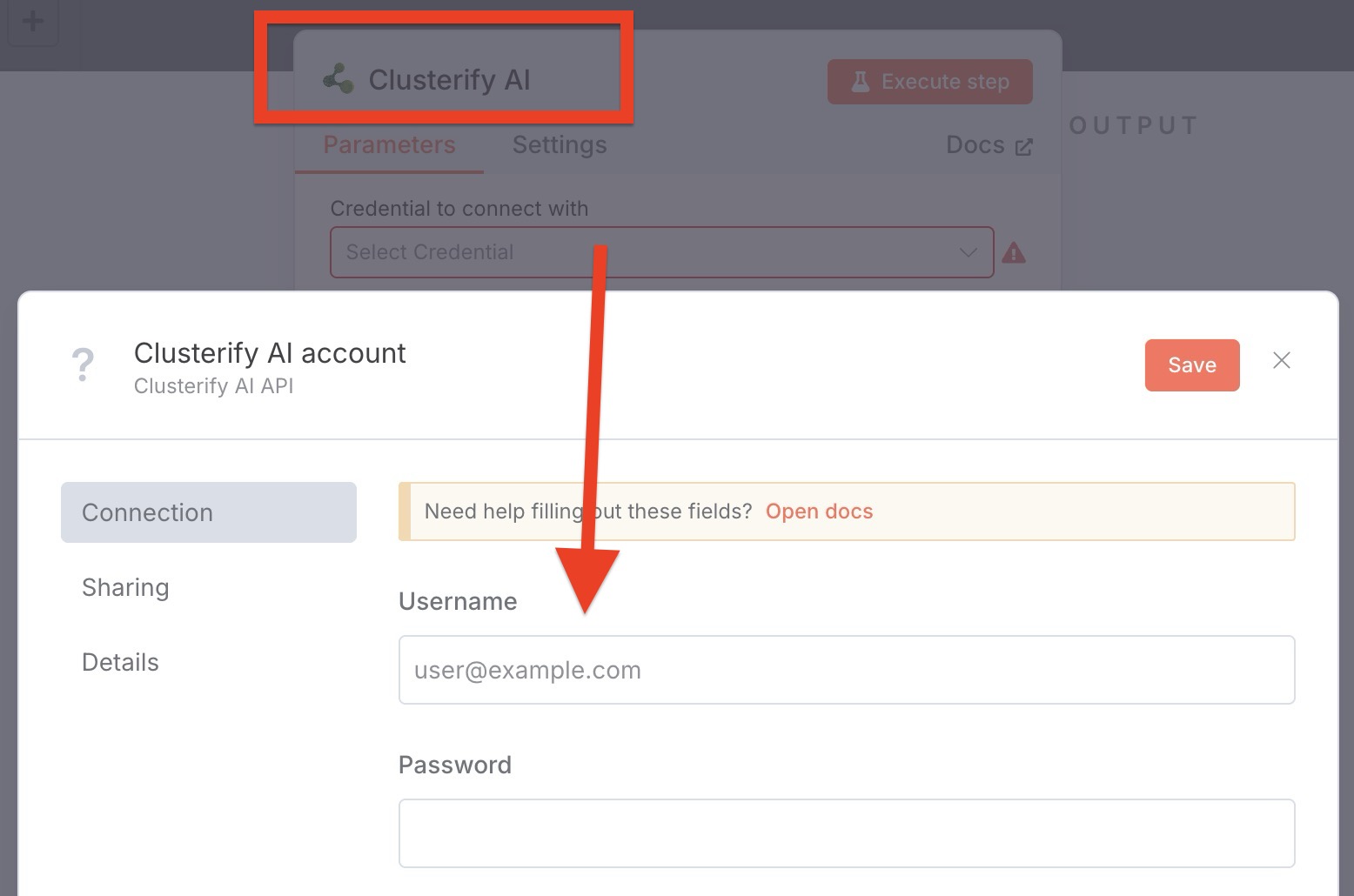

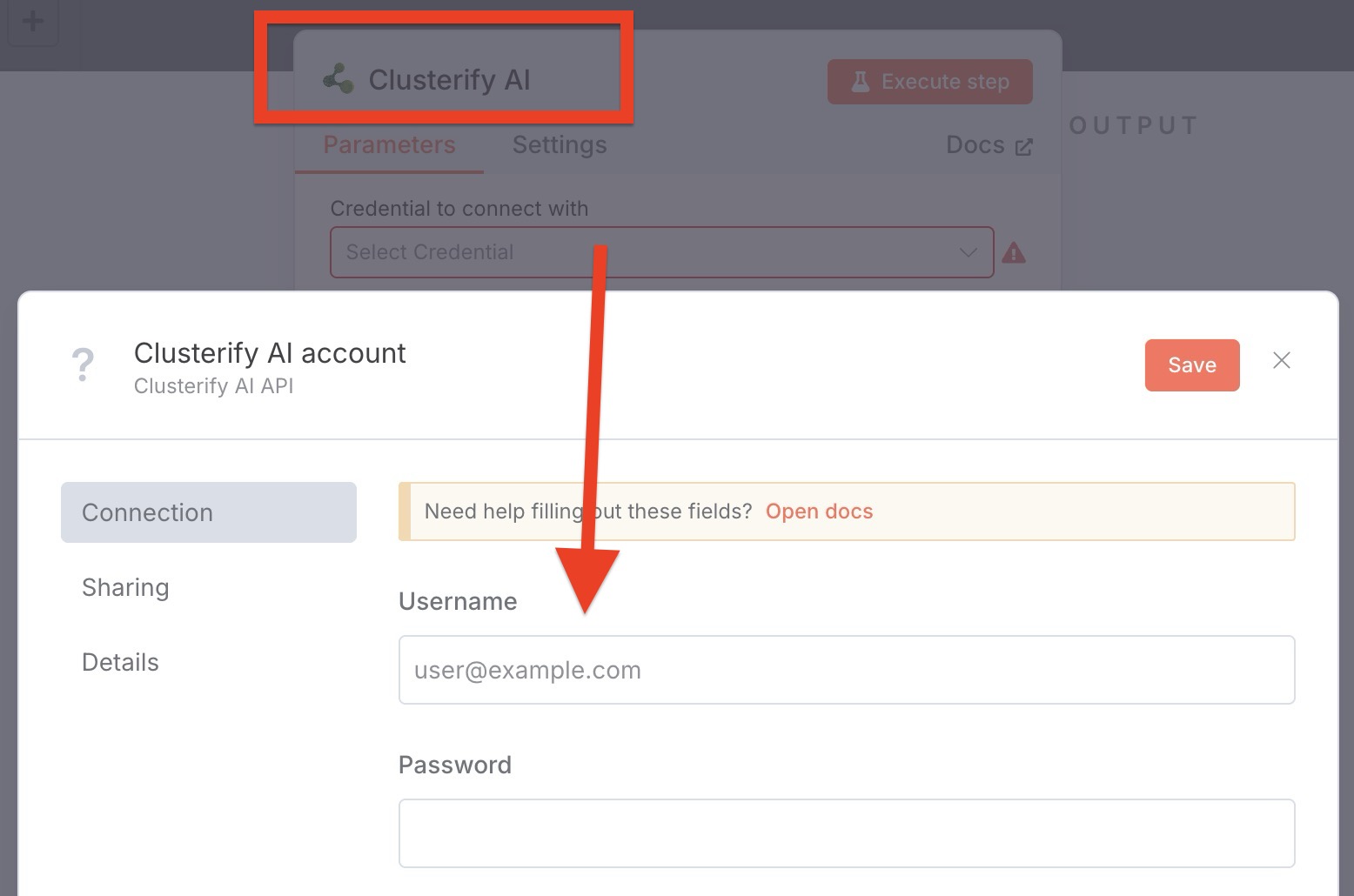

Handling Secure Authentication in Your Custom Node

A primary function of your custom node is to manage the authentication process securely. While the MCP Server itself provides a robust security layer for your SaaS, the node must present the proper credentials to gain access.

Your custom node can use the workflow platform’s built-in credential management system to store the necessary API keys or tokens securely and supply them at run time. This lets the node establish an authenticated connection without exposing secrets in the workflow definition, so the AI Agent and its workflow can use your MCP Server’s resources immediately.
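As a rough sketch of that idea, the class below models a custom node that receives its credentials from the platform and attaches them to each request. Everything here is hypothetical: the `McpNode` class, the `api_token` credential field, and the `https://mcp.example.com/mcp` URL are placeholders, and a real node would follow the plugin API of the platform you choose. The sketch only builds the request and does not send it. It also leaves out the initialization handshake that a real MCP session starts with.

```python
import json
import urllib.request

class McpNode:
    """Hypothetical custom node; `credentials` is injected by the workflow platform."""

    def __init__(self, server_url, credentials):
        self.server_url = server_url
        # The token lives only in memory, never in the workflow definition.
        self._token = credentials["api_token"]

    def build_request(self, tool_name, arguments, request_id=1):
        """Build an authenticated tools/call request for the MCP Server."""
        payload = {"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
                   "params": {"name": tool_name, "arguments": arguments}}
        return urllib.request.Request(
            self.server_url,
            data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json",
                     "Authorization": f"Bearer {self._token}"},
            method="POST",
        )

    def __repr__(self):
        # Mask the token so it cannot leak through logs or debug output.
        return f"McpNode(server_url={self.server_url!r}, token=***)"

node = McpNode("https://mcp.example.com/mcp", {"api_token": "example-token"})
req = node.build_request("create_report", {"period": "this week"})
print(req.get_method(), req.full_url)
print(node)
```

Keeping the token out of `__repr__` is a small design choice that matters in practice, because workflow platforms often log node state when a step fails.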

A Critical Recommendation: Self-Hosting for Security and Control

For any serious enterprise application, especially for businesses here in the Frisco tech corridor, I strongly recommend self-hosting your chosen workflow automation platform.

While cloud-hosted options offer convenience, self-hosting provides unparalleled advantages for this type of integration:

- Data Sovereignty & Compliance: You maintain complete control over your data, ensuring sensitive information never leaves your private infrastructure. This is critical for meeting compliance standards like HIPAA, GDPR, or SOC 2.

- Enhanced Security: You control the entire security stack, from network access to infrastructure hardening, eliminating the risks associated with multi-tenant cloud environments.

- Full Autonomy: Gain full control over versioning, upgrades, and custom development cycles without being subject to a third-party vendor’s roadmap or potential service disruptions.

Exposing Your SaaS Functionality as “Tools”

The core of using an MCP Server with your SaaS is to expose your existing API endpoints as “tools” that an AI model can understand and use.

- Define Your Tools: Identify the key functionalities of your SaaS that would be valuable for an AI to access. These could be actions like:

    - Retrieving customer data.

    - Creating a new project or task.

    - Sending a notification.

    - Querying your database for specific information.

- Map to API Calls: Each “tool” you define will correspond to one or more of your existing API calls. For example, a “create_new_user” tool would map to the POST /users endpoint in your API.
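One way to picture this mapping is a small catalog that pairs each tool with the endpoint behind it. In the sketch below, the `name`, `description`, and `inputSchema` fields mirror how MCP describes a tool to the AI. The `http` field, the `get_customer` tool, its `/customers/{customer_id}` path, and the `resolve` helper are assumptions invented for illustration.

```python
from urllib.parse import quote

# Hypothetical catalog: the AI sees description and inputSchema;
# the "http" entry stays private to the MCP Server.
TOOLS = {
    "create_new_user": {
        "description": "Create a new user account.",
        "inputSchema": {
            "type": "object",
            "properties": {"email": {"type": "string"}, "name": {"type": "string"}},
            "required": ["email"],
        },
        "http": ("POST", "/users"),
    },
    "get_customer": {
        "description": "Retrieve a customer record by ID.",
        "inputSchema": {
            "type": "object",
            "properties": {"customer_id": {"type": "string"}},
            "required": ["customer_id"],
        },
        "http": ("GET", "/customers/{customer_id}"),
    },
}

def resolve(tool_name, arguments):
    """Translate a tool call into (method, path, body), checking required arguments."""
    tool = TOOLS[tool_name]
    missing = [key for key in tool["inputSchema"]["required"] if key not in arguments]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    method, template = tool["http"]
    # URL-encode values so an argument cannot alter the path structure.
    path = template.format(**{k: quote(str(v), safe="") for k, v in arguments.items()})
    # Arguments consumed by the path are not repeated in the body.
    body = {k: v for k, v in arguments.items() if "{" + k + "}" not in template}
    return method, path, body

print(resolve("create_new_user", {"email": "a@example.com"}))
print(resolve("get_customer", {"customer_id": "c 42"}))
```

Checking required arguments before building the request means a malformed tool call fails inside the MCP Server instead of reaching your SaaS API.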

Building and Deploying Your MCP Server

You will need to build an MCP Server that acts as an intermediary between the AI model and your SaaS APIs.

- Choose a Framework: There are various open-source libraries and frameworks available in languages like Python, Node.js, and Java to help you build an MCP Server. These frameworks handle the complexities of the MCP protocol.

- Implement Tool Logic: Within your MCP Server, you’ll write the logic that translates a tool request from the AI model into the corresponding API call to your SaaS. This includes handling authentication with your API, formatting the request, and processing the response.

- Authentication and Authorization: Implement robust security measures. Your MCP Server should securely store and use API keys or OAuth tokens to interact with your SaaS API on behalf of the user. It should also enforce permissions to control which users or AI models can use specific tools.

- Deployment: You can deploy your MCP Server in a variety of environments, including on-premises servers or cloud platforms like AWS, Google Cloud, or Azure.
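The authentication and authorization step might look roughly like the following. This is a sketch under stated assumptions, not a complete security layer: the role names, the `PERMISSIONS` table, and the `SAAS_API_TOKEN` variable name are all hypothetical, and a production server would typically load secrets from a dedicated secret manager.

```python
import os

# Hypothetical role-to-tool permissions enforced by the MCP Server.
PERMISSIONS = {
    "viewer": {"get_customer"},
    "admin": {"get_customer", "create_new_user"},
}

def authorize(role, tool_name):
    """Raise PermissionError unless the role is allowed to use the tool."""
    if tool_name not in PERMISSIONS.get(role, set()):
        raise PermissionError(f"role {role!r} may not call {tool_name!r}")

def saas_headers(env=os.environ):
    """Build headers for the SaaS API from a token kept outside the code."""
    token = env.get("SAAS_API_TOKEN")
    if not token:
        raise RuntimeError("SAAS_API_TOKEN is not set")
    return {"Authorization": f"Bearer {token}", "Content-Type": "application/json"}

# An admin may create users; the call returns without raising.
authorize("admin", "create_new_user")
headers = saas_headers({"SAAS_API_TOKEN": "example-token"})

# A viewer is refused before any request reaches the SaaS API.
try:
    authorize("viewer", "create_new_user")
except PermissionError as exc:
    print("denied:", exc)
```

Running the permission check before the headers are built keeps the token from being read at all for requests that will be refused.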

Connecting to AI Applications

Once your MCP Server is running, you can connect it to AI applications.

- AI Model Configuration: You will need to configure the AI application that hosts the model (e.g., a custom application or a platform like OpenAI’s GPT store) with the connection details of your MCP Server. This allows the model to “discover” the tools your server exposes.

- Natural Language Interaction: Users can then interact with your SaaS through natural language prompts. For example, a user could type, “Create a new project in my SaaS called ‘Q3 Marketing Campaign’.” The AI model would understand the intent and call the appropriate tool on your MCP Server, which in turn would make the corresponding API call to your SaaS platform.
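Discovery and invocation can be sketched as two JSON-RPC messages. The `tools/list` and `tools/call` method names come from MCP. The `create_project` tool, its schema, and the `build_call` helper are hypothetical, and the step where the model chooses a tool from the prompt is left out.

```python
import json

# Request an AI application sends to discover the server's tools.
list_request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}

# Hypothetical response from the MCP Server.
list_response = {
    "jsonrpc": "2.0", "id": 1,
    "result": {"tools": [
        {"name": "create_project",
         "description": "Create a new project.",
         "inputSchema": {"type": "object",
                         "properties": {"name": {"type": "string"}},
                         "required": ["name"]}},
    ]},
}

def build_call(tools, tool_name, arguments, request_id):
    """Build a tools/call message, refusing tools the server did not advertise."""
    advertised = {tool["name"] for tool in tools}
    if tool_name not in advertised:
        raise LookupError(f"tool {tool_name!r} was not advertised by the server")
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
            "params": {"name": tool_name, "arguments": arguments}}

# After the model maps the user's prompt to a tool and its arguments:
call = build_call(list_response["result"]["tools"], "create_project",
                  {"name": "Q3 Marketing Campaign"}, request_id=2)
print(json.dumps(call, indent=2))
```

The descriptions and schemas in the `tools/list` response are what the model reads when deciding which tool fits a prompt, so it pays to write them as carefully as user-facing documentation.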

By implementing an MCP Server, you can significantly enhance the accessibility and usability of your SaaS, allowing for powerful new workflows and integrations with the rapidly evolving ecosystem of generative AI.